✦ Massachusetts Institute of Technology

✥ Qatar Computing Research Institute

Presented at

WWW 2017

Abstract

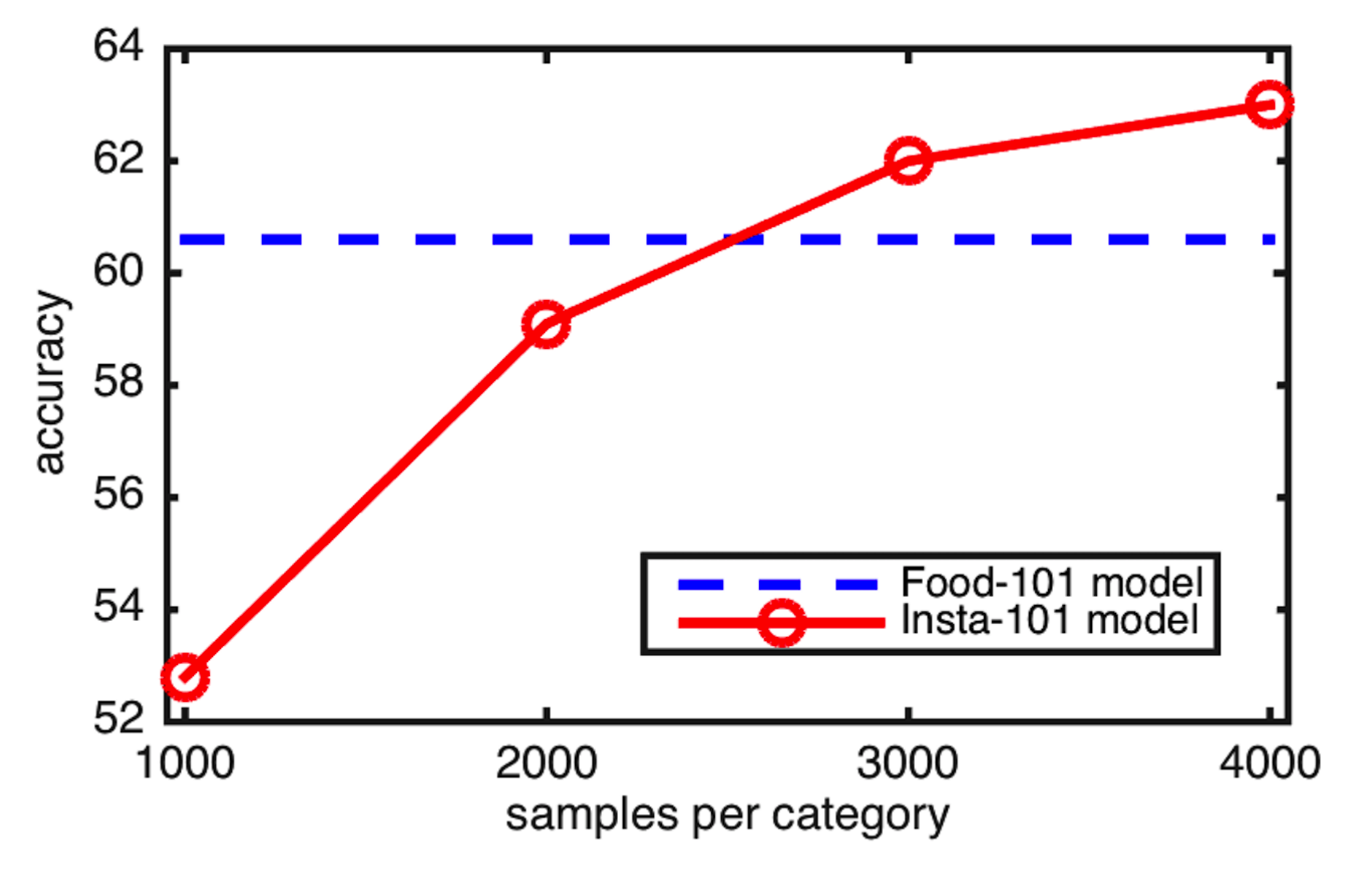

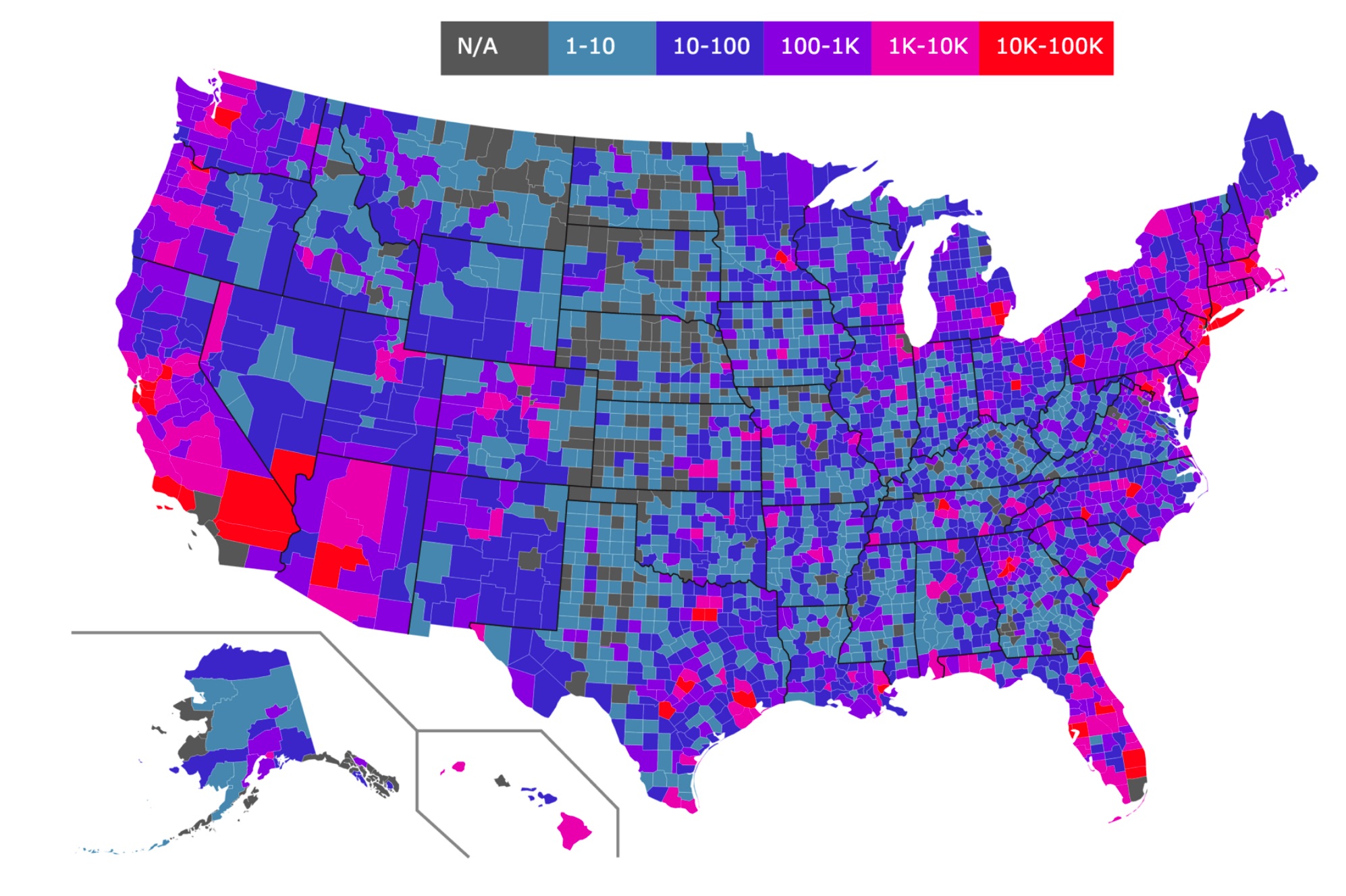

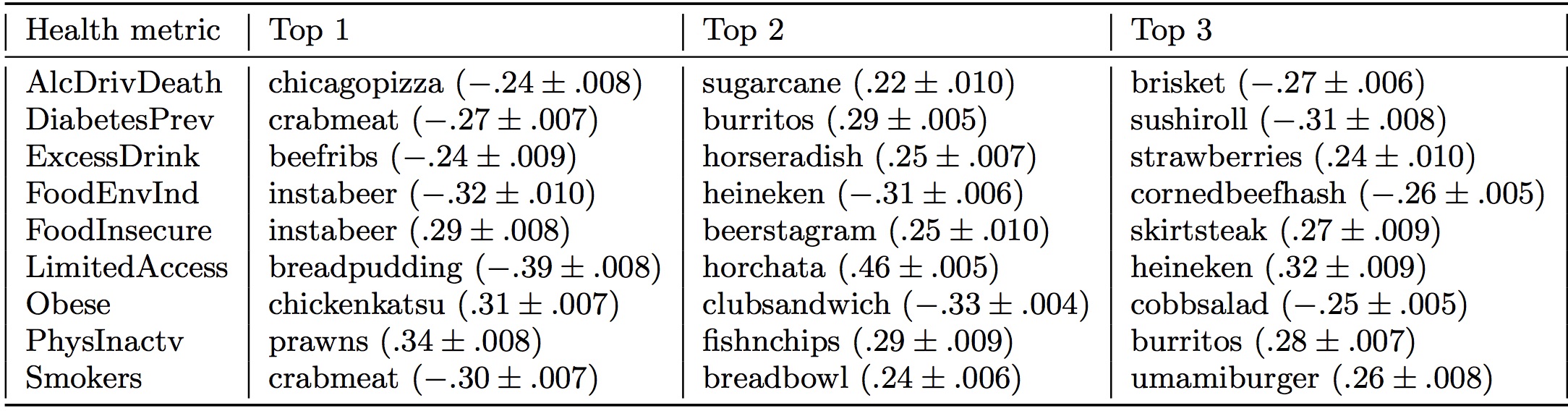

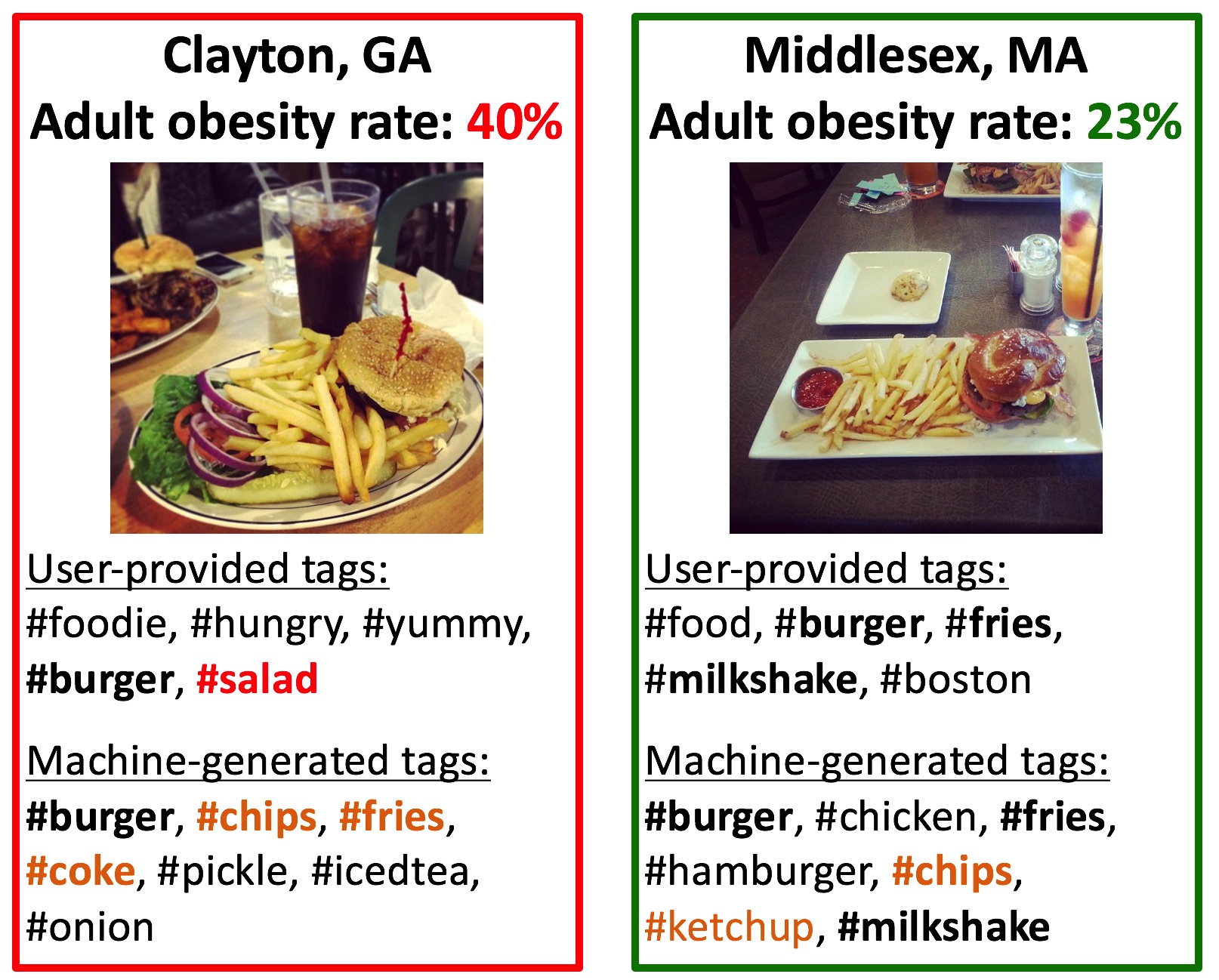

Food is an integral part of our life, and what and how much we eat crucially affects our health. Our food choices largely depend on how we perceive certain characteristics of food, such as whether it is healthy or delicious, or whether it qualifies as a salad. But these perceptions differ from person to person, and one person's "single lettuce leaf" might be another person's "side salad". Studying how food is perceived in relation to what it actually is typically involves a laboratory setup. Here we propose to use recent advances in image recognition to tackle this problem. Concretely, we use 1.9 million Instagram images from the US to look at systematic differences in how a machine would objectively label an image compared to how a human subjectively does. We show that this difference, which we call the "perception gap", relates to a number of health outcomes observed at the county level. To the best of our knowledge, this is the first time that image recognition has been used to study the "misalignment" between how people describe food images and what those images actually depict.

Demo: learning to recognize food from social media images

We propose to learn food classifiers from noisy images collected from social media posts (here, Instagram). These classifiers are used to automatically generate food-related hashtags for a given food image. We use deep residual networks (ResNets) for food recognition.
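To make the auto-tagging step concrete, here is a minimal sketch of turning a classifier's raw output scores into hashtags. It assumes, for illustration only, that the network ends in a single softmax over a fixed hashtag vocabulary and that tags are chosen by taking the top-k most probable entries; the function names `softmax` and `auto_tag`, the `min_prob` cut-off, and the toy vocabulary are hypothetical and are not taken from the released code.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of raw class scores."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def auto_tag(logits, vocab, k=5, min_prob=0.0):
    """Return up to k hashtags, most likely first, keeping only those whose
    probability is at least min_prob. vocab[i] names output unit i.
    Hypothetical helper: assumes one softmax over the whole tag vocabulary."""
    if len(logits) != len(vocab):
        raise ValueError("need one score per hashtag in the vocabulary")
    probs = softmax(logits)
    ranked = sorted(zip(vocab, probs), key=lambda pair: pair[1], reverse=True)
    return ["#" + tag for tag, p in ranked[:k] if p >= min_prob]


if __name__ == "__main__":
    # Toy 5-tag vocabulary; a real tagger would have one entry per dictionary tag.
    vocab = ["steak", "fries", "salad", "sushi", "beef"]
    logits = [4.0, 2.5, -1.0, -2.0, 3.0]
    print(auto_tag(logits, vocab, k=3))  # ['#steak', '#beef', '#fries']
```

Because softmax preserves the ordering of the scores, the top-k tags could be read directly off the logits; computing probabilities only matters when a confidence threshold such as `min_prob` is applied.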

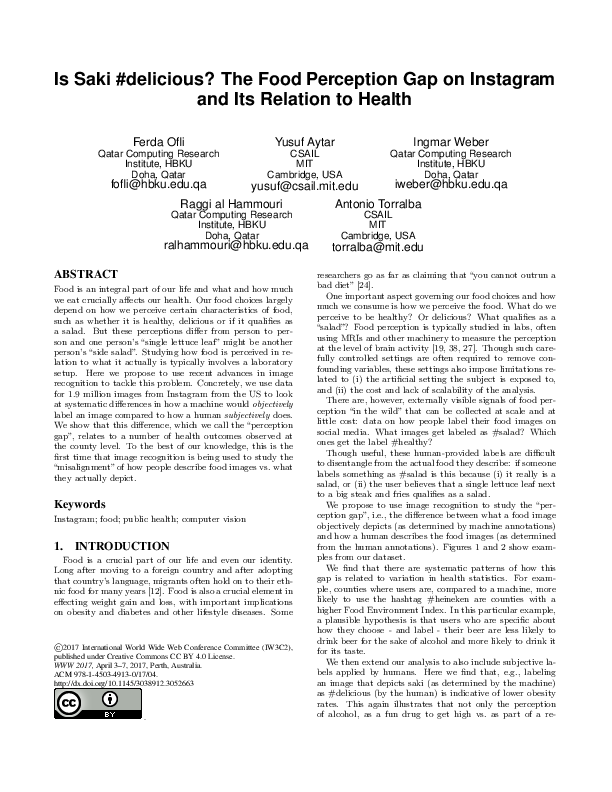

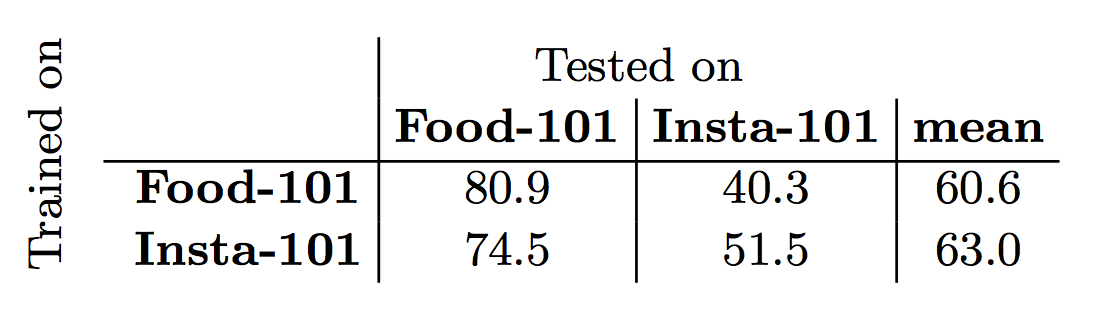

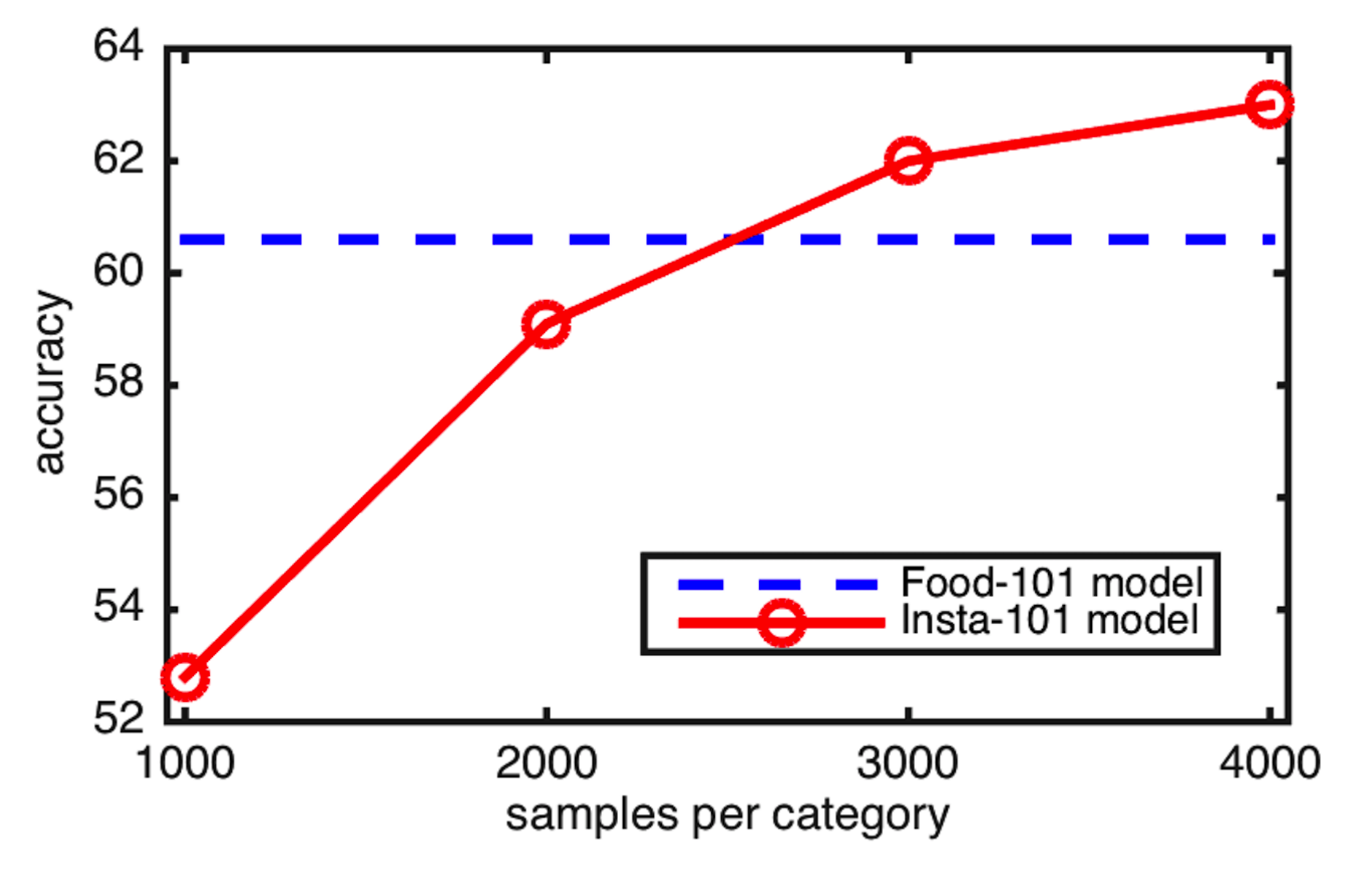

Below is a performance comparison of models trained on noisy social media images (Insta-101) vs. curated datasets such as Food-101:

Cross-dataset performance analysis

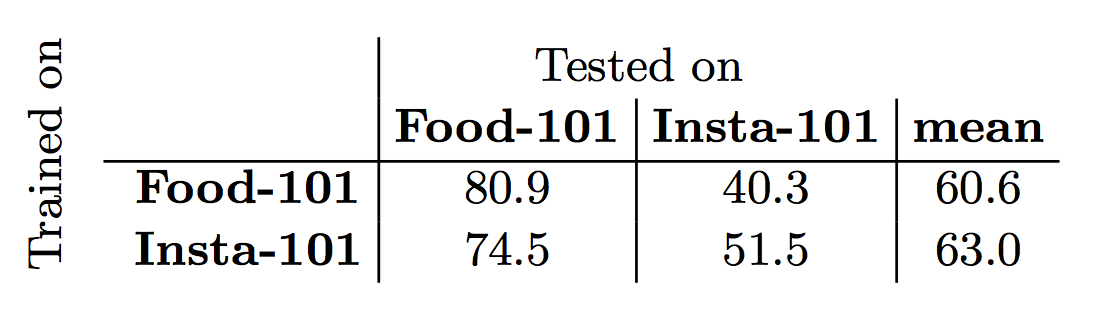

Mean cross-dataset performance of the Insta-101 model trained with an increasing number of training samples per category. Note that at around 2,500 samples per category, the Insta-101 model reaches the performance of the Food-101 model.
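As an illustration of what a "mean cross-dataset" number can mean, the sketch below averages top-1 accuracy over every test dataset other than the one a model was trained on. The choice of top-1 accuracy, the exclusion of the training dataset, and the dataset names `DatasetA`/`DatasetB` are assumptions made for this example; it also assumes the labels of all datasets have already been mapped to a shared category space.

```python
def top1_accuracy(predicted, actual):
    """Fraction of images whose predicted label equals the ground-truth label."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("need equal-length, non-empty label lists")
    correct = sum(p == a for p, a in zip(predicted, actual))
    return correct / len(actual)


def mean_cross_dataset_accuracy(results, train_set):
    """Average top-1 accuracy over all test datasets except train_set.
    results maps dataset name -> (predicted labels, ground-truth labels).
    Assumption: labels already share one category space across datasets."""
    scores = [
        top1_accuracy(pred, gold)
        for name, (pred, gold) in results.items()
        if name != train_set
    ]
    if not scores:
        raise ValueError("no held-out datasets to evaluate on")
    return sum(scores) / len(scores)


# Hypothetical predictions of a model trained on Insta-101.
results = {
    "Insta-101": (["a", "b"], ["a", "b"]),                 # skipped: training source
    "DatasetA": (["a", "b", "c", "d"], ["a", "x", "c", "d"]),  # 3/4 correct
    "DatasetB": (["a", "b"], ["a", "z"]),                  # 1/2 correct
}
print(mean_cross_dataset_accuracy(results, "Insta-101"))  # 0.625
```

Repeating this computation for models trained on progressively larger per-category subsets would yield a curve of the kind plotted above.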


Human hashtags: #chomp #modernbritishsteakhouse #steak #beef #weekend #ribeye #bristol #bristolfood

Machine hashtags: #steak #potato #fries #meat #frenchfries #beef #burger #chips #potatoes

An example of machine-generated food hashtags, shown alongside the hashtags the user actually posted

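One simple way to see the mismatch in the steak example is to compare the two hashtag lists as sets. The sketch below, a plain exact-string comparison rather than the method used in the paper, splits the tags into shared, human-only, and machine-only groups and reports their Jaccard overlap; the helper name `compare_tags` is hypothetical.

```python
def compare_tags(human, machine):
    """Split two hashtag lists into shared, human-only and machine-only sets
    and return the Jaccard overlap |H & M| / |H | M| (exact string match)."""
    h = {t.lstrip("#").lower() for t in human}
    m = {t.lstrip("#").lower() for t in machine}
    union = h | m
    jaccard = len(h & m) / len(union) if union else 1.0
    return h & m, h - m, m - h, jaccard


human = "#chomp #modernbritishsteakhouse #steak #beef #weekend #ribeye #bristol #bristolfood".split()
machine = "#steak #potato #fries #meat #frenchfries #beef #burger #chips #potatoes".split()
shared, human_only, machine_only, j = compare_tags(human, machine)
print(sorted(shared), round(j, 3))  # ['beef', 'steak'] 0.133
```

Only #steak and #beef appear in both lists. The human tags add context (place, occasion, cut of meat), while the machine tags add visible items the user never mentioned, such as fries. Exact string matching is deliberately naive here: it treats #ribeye and #steak, or #fries and #frenchfries, as unrelated tags.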

Food perception gap

We study the "misalignment" between how people describe food images and what those images actually depict. Concretely, we model systematic differences between how a machine would "objectively" label an image and how a human "subjectively" does.
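As a rough illustration of aggregating such differences by region, the sketch below computes, for one food tag, the share of posts in each region that humans tagged with it minus the share the machine tagged with it. This is a simplified, hypothetical measure chosen for the example; the paper's exact definition of the perception gap may differ. The function name `tag_rate_gap`, the input layout, and the county names are all assumptions.

```python
from collections import defaultdict


def tag_rate_gap(posts, tag):
    """For each region: (share of posts humans tagged with `tag`)
    minus (share of posts the machine tagged with `tag`).
    posts is an iterable of (region, human_tags, machine_tags) tuples."""
    counts = defaultdict(lambda: [0, 0, 0])  # region -> [posts, human hits, machine hits]
    for region, human_tags, machine_tags in posts:
        c = counts[region]
        c[0] += 1
        c[1] += tag in human_tags    # bool counts as 0 or 1
        c[2] += tag in machine_tags
    return {region: (h - m) / n for region, (n, h, m) in counts.items()}


# Hypothetical posts from two counties.
posts = [
    ("county_A", {"salad"}, {"lettuce"}),
    ("county_A", {"salad", "healthy"}, {"salad"}),
    ("county_B", {"burger"}, {"salad"}),
    ("county_B", {"salad"}, {"salad"}),
]
print(tag_rate_gap(posts, "salad"))  # {'county_A': 0.5, 'county_B': -0.5}
```

A positive value means people in that region call something a salad more often than the machine sees one (the "single lettuce leaf" case); a negative value means the reverse. Per-county values of this kind are what could then be compared against county-level health statistics.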

Data & Pretrained Models

We release our food auto-tagger, trained on noisy social media images, together with its hashtag dictionary of 1,170 tags. The model is in Caffe format.

- Pretrained food classifier (ResNet-50) and example MATLAB code for auto-tagging (93 MB .tar.gz file)

- Data used in social media analysis (coming soon!)

Citation

@inproceedings{ofli2017saki,
  title={Is Saki #delicious? The Food Perception Gap on Instagram and Its Relation to Health},
  author={Ofli, F. and Aytar, Y. and Weber, I. and Hammouri, R. and Torralba, A.},
  booktitle={Proceedings of the 26th International Conference on World Wide Web},
  year={2017},
  organization={International World Wide Web Conferences Steering Committee}
}

Acknowledgements

This work has been supported by CSAIL-QCRI collaboration projects.